Converting 2D images to 3D images is crucial for video game developers, e-commerce companies, and animation companies, but it is not easy. Including the artificial intelligence (AI) research laboratories of technology giants such as Facebook and Nvidia, as well as some start-up companies are constantly exploring this field.

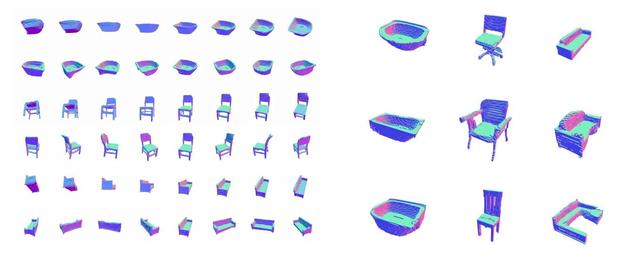

Recently, the research team of Microsoft Research (Microsoft Research) published a preprinted paper in which they introduced a new AI framework in detail. The framework uses “scalable” training technology to transform 2D images into 3D shapes. The simulation is generated. The researchers said that when using 2D images for training, the framework can always generate better 3D shapes than existing models. This is an excellent automation tool for game development, video production, animation and other fields.

Generally speaking, for a model framework to convert 2D to 3D images, it needs to perform differential step rendering through rasterization processing. Therefore, in the past, researchers in this field have focused on the development of custom rendering models. However, the images processed by this type of model will appear unrealistic and unsuitable for generating industrial renderings for games and graphics industries.

The Microsoft team uses an industrial renderer, which can generate images based on display data. In addition, the researchers also trained a 3D shape generation model to render the shape and generate images that match the distribution of the 2D data set. In other words, this is a novel proxy neural renderer that can directly render continuous voxel meshes generated by 3D shape generation models.

During the experiment, the research team adopted the 3D convolutional GAN architecture in the above-mentioned 3D shape generation model. GAN, also known as generative confrontation network, is a two-part AI model. It can synthesize images from different object categories based on the data set generated by the 3D model and the real data set. Angle to render.

In addition, the researchers stated in the paper that their new method also makes full use of the light and shadow information in the 2D photo. Specifically, it uses the exposure difference between the surfaces to detect the concave and convex surfaces, as well as the internal structure, In this way, the center of the three-dimensional object can be judged, so as to achieve better simulation training and generate a more realistic 3D model.

According to the researchers, their next plan is to integrate the whole set of methods into a relatively complete system, adding details such as colors, materials, and lighting, so as to create a “more comprehensive” data set of real photo conversion 3D models. .

(Editor in charge: admin)

0 Comments for “Using 2D images to generate 3D models, Microsoft’s new AI model may become a gospel for the game industry”